AI: To err is human, but should we forgive?

A non-technical discussion on using generative AI in knowledge systems

Earlier this year, Stanford University released research into how standard AI models (Large Language Models, or LLMs) perform on legal queries. They tested over 200,000 questions and found that OpenAI’s GPT-3.5 model had an average hallucination rate of 69% (they also tested other models, which fared worse). In other words, if you ask ChatGPT (the GPT-3.5 version) a legal question, the answer more likely than not contains a hallucination, i.e. some fact or statement that is entirely fictional. Obviously not ideal for legal questions.

Since then, the response from legal tech has been fairly unanimous: “yes, of course it’s terrible”. Most people in legal tech (and probably in any domain thinking about how generative AI can be utilised) are not recommending using ChatGPT off the shelf for expert knowledge and advice systems.

This article is a tech-free discussion of the strengths, limitations and workarounds of LLMs for legal queries, and a look at the product decisions to consider when working in this space.

The strengths of Large Language Models

I find the easiest non-technical way to reason about LLMs is to think of them as a person. LLMs have two core, but distinct, strengths, which are analogous to how we are taught as children:

Language comprehension - A standard part of the English language curriculum in schools is language comprehension. We are probably all familiar with the comprehension exercises and tests we had to do at school: you are given a passage of text you haven’t seen before and have to answer questions about it.

Knowledge - Taught and assessed across a range of subjects (history lessons, for example). In schools, knowledge is often taught via language (spoken and written), so knowledge acquisition is quite closely tied to language comprehension.

When you ask a person, or an LLM, a question, both of these abilities are put to use: first, comprehension is needed to understand the question being asked, and second, knowledge is needed to supply any relevant facts for the answer.

To err is human

If you asked me who the first man on the moon was, I’d answer almost immediately: Neil Armstrong.

This question relies on both my comprehension and my knowledge. I have developed knowledge over the years, and the knowledge I’ve been most exposed to is the knowledge I’m most confident in. Neil Armstrong being the first man on the moon is widely referenced in general knowledge, popular culture and so on, so it’s a fact most of us can recall pretty instantly.

A similar concept applies to LLMs: they have learnt a huge breadth of general knowledge (having read, basically, most of the internet). Being trained on such a broad range of subjects means they have fewer areas of weakness than a person. However, they will also have consumed far more content on any given subject, from many different sources, which means there is much more variation in what they have learnt about it.

As an example, if I watched my favourite movie 100 times, it would reinforce everything I know about the movie, and I’d probably be able to tell you every single detail pretty accurately. Whereas if I watched 100 games of rugby (which I probably have), there would be a lot more variation. I’d be able to describe the general principles and rules quite well, but I might struggle to remember precise details, such as who played when and who scored. The latter is closer to LLM training. They haven’t been trained on a single-point-of-truth law textbook 1,000 times; they’ve been trained on thousands of different texts, all slightly different in wording and content (not to mention differences in laws between jurisdictions, laws changing over time, and so on).

I’ve watched a lot of Welsh rugby, and if you asked me who the wingers in the Welsh 2005 Grand Slam squad were, I’d say definitely Shane Williams, and... Rhys Williams, or maybe Mark Taylor, or maybe Dafydd James, or maybe Mark Jones? I know these are all Welsh wingers from about that era, and I know they all look like the correct answer (if you told me it was any two of those, I’d believe you at face value), but I can’t remember for sure.

This is the challenge LLMs face: when generating an answer, there may be several candidates for any given word that look correct. The key difference is that LLMs don’t recognise that they are unsure, or say that they can’t quite remember. They would get to the first name in the list and state it with complete confidence. That is to say, rather than recognising that only one of the names in that list is correct, they treat all the names in the list as correct and interchangeable.

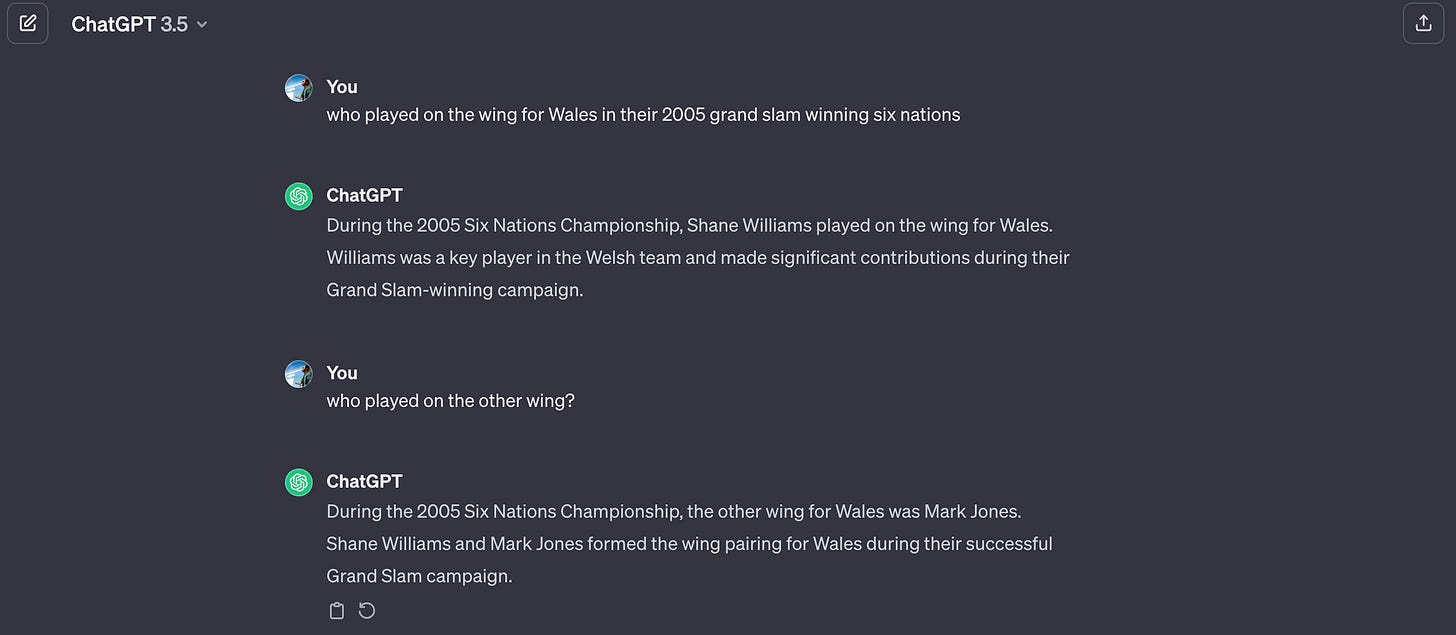

As a wonderful turn of events (wonderful for the sake of my example), I asked ChatGPT that question and it seems they had the same dilemma as I did, and guessed with complete confidence (and got it wrong):

(It locked in on Shane Williams straight away, as I did, but eventually settled on Mark Jones for the other wing, which is incorrect. Having looked it up to confirm, it was Rhys Williams until he was injured and replaced by Mark Taylor.)

A little learning is a dangerous thing

So, knowledge, whether it be an LLM or a human, is fallible. It can still be useful in all sorts of circumstances, especially when it’s not critical or not complex, but any of us can misremember or just be plain wrong.

Language comprehension, on the other hand, has more interesting potential. Going back to our rugby example, if you asked me the same question but also provided me with a write-up of the 2005 tournament, I’d be confident of getting the answer 100% right.

The same idea applies to LLMs. If you provide accurate reference information along with a question, then the chance of hallucination drops considerably. The LLM no longer has to remember the answer; it can rely on its language comprehension to understand the question and the provided reference, and answer from those. As we have discussed, knowledge is where hallucinations come from, so the more we can remove that aspect, the more the risk reduces along with it.

The other advantage of this approach is that we (humans or LLMs) can also provide references to our sources. Earlier I told you Neil Armstrong was the first man on the moon, but I have no idea where I got that information. I will have heard or read the fact in many places over the years, but I couldn’t point you to a single place where I learnt it. If you gave me a textbook to answer the question, then of course I’d be able to tell you the exact reference (the name and page number of the textbook), and even quote the textbook verbatim.

How does all this help legal AI tools?

To return to the start, and the woeful performance of off-the-shelf LLMs: the reason so much of the response was “we know!” is that many people building knowledge tools around LLMs (in legal and most other domains) aren’t relying on LLMs like this. Instead, they use a comprehension-based approach similar to the one described above.

The method is referred to as RAG (Retrieval Augmented Generation), and in its simplest form it works as follows:

The user asks a question

The platform then searches its knowledge bank for information relevant to the question

The relevant information and the user’s question are then passed to an LLM with an instruction along the lines of: “Read this and answer this question. If the answer isn’t in the text, say ‘I don’t know’.”
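To make those three steps concrete, here is a minimal Python sketch. It is an illustration under loud assumptions, not how any particular product works. The "knowledge bank" is a hypothetical in-memory dictionary. Retrieval is crude word overlap, standing in for the keyword or embedding search a real platform would use. `call_llm` is a hypothetical placeholder for whichever model API you use. The point is the shape of the pipeline: retrieve, then ask the model to answer only from what was retrieved, and keep the source IDs so they can be shown to the user.

```python
import re
from typing import Callable

# Very common words are ignored so they don't count as "relevant" matches.
STOPWORDS = {"the", "a", "an", "was", "who", "on", "in", "of", "to", "is", "what"}


def words(text: str) -> set[str]:
    """Lower-case the text and return its non-stopword words."""
    return set(re.findall(r"[a-z0-9']+", text.lower())) - STOPWORDS


def retrieve(question: str, bank: dict[str, str], top_k: int = 2) -> list[tuple[str, str]]:
    """Step 2: return up to top_k (doc_id, text) passages sharing words with the question.

    Word overlap is a toy stand-in for a real search index.
    """
    q = words(question)
    scored = [(len(q & words(text)), doc_id, text) for doc_id, text in bank.items()]
    scored = [s for s in scored if s[0] > 0]        # drop passages with no overlap
    scored.sort(key=lambda s: s[0], reverse=True)   # best-matching passages first
    return [(doc_id, text) for _, doc_id, text in scored[:top_k]]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    """Step 3: wrap the sources and question in a 'read this and answer' instruction."""
    sources = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer the question using only the sources below, citing their IDs. "
        "If the answer isn't in the sources, say \"I don't know\".\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


def answer(question: str, bank: dict[str, str],
           call_llm: Callable[[str], str]) -> tuple[str, list[str]]:
    """Run the pipeline; return the model's reply and the source IDs it was given."""
    passages = retrieve(question, bank)
    if not passages:
        return "I don't know", []  # nothing relevant found, so don't ask the model at all
    reply = call_llm(build_prompt(question, passages))
    return reply, [doc_id for doc_id, _ in passages]


# Usage with a toy knowledge bank and a fake model (no real LLM is called).
bank = {
    "moon-1": "Neil Armstrong was the first man to walk on the moon, in 1969.",
    "rugby-1": "Wales won the Grand Slam in the 2005 Six Nations Championship.",
}


def fake_llm(prompt: str) -> str:
    return "Neil Armstrong [moon-1]"  # stand-in for a real model call


print(answer("Who was the first man on the moon?", bank, fake_llm))
# → ('Neil Armstrong [moon-1]', ['moon-1'])
```

Returning the source IDs alongside the reply is what makes the "references for verification" point below possible. The user can be shown exactly which passages the answer was meant to come from.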

As already covered, this approach is appealing because:

It reduces the dependency on LLM “knowledge” and therefore reduces the likelihood of hallucinations.

Because we have already narrowed it down to a few pieces of source text, we can give the user source references for verification and further reading.

Great, so it all works perfectly?

Of course not; this is still a fallible solution. We can’t guarantee that the LLM won’t hallucinate or simply decide to make things up when answering the question. These systems all work from natural language instructions, so there is always room for error (or creativity).

But even if it does hallucinate, it provides references for verification, so that’s all OK then, right?

Well, it’s a good start, but still not foolproof.

Before AI, there will have been millions of people googling and taking legal/commercial/medical advice from the first website that came up.

When ChatGPT first launched, there will have been millions of people asking it questions and taking legal/commercial/medical advice based on its responses.

In many cases, people trust things that sound right. In some cases, people are lazy. Sometimes people just want some vague indication that they are on the right track.

All of which is to say that relying on human end-users to double-check and verify the answer still carries risk, because they just might not do it.

The issue I have with this, from a product point of view, is that it creates a burden of doubt. For critical systems that require accuracy, any initial efficiencies gained from AI-generated answers are lost, because the onus is put back onto the user to check that the system is correct. This increases the cognitive load and effort the user has to put into using the system.

Whilst the decision to verify source information is very much at the discretion of the end-user, the best product experience is one where they get the right answer, without doubt, and can trust that the system’s answer is right.

Can we do better?

Yes: we could stop using LLM-generated answers!

Whilst that sounds counterintuitive, the ideal knowledge system with LLM capabilities is one that doesn’t require an LLM to generate answers.

The RAG system described above can be improved by adding what is often referred to as a human in the loop, meaning expert human oversight to review generated content. Of course, most platforms wouldn’t want to do that in real time, as it would make the platform painfully slow and wouldn’t scale well. However, if you can have human oversight at a high level, retrospectively, you can introduce the concept of verified answers. These might be written by humans, or generated by the LLM and later reviewed and approved by relevant domain experts.

As you build up a set of verified answers, you should gradually be able to route more and more questions to pre-approved, expert-verified information, and the dependency on the LLM to generate answers reduces (and with it, the risk). The process now looks like this:

The user asks a question

The platform compares the question against previously asked, verified questions. If there is a good match, it returns the verified answer to the user

If there is no match, it continues with the usual process of searching for source references and asking the LLM to generate an answer. This new question/answer pair is then added to the list for our expert team to review and verify (this step would check accuracy as well as ensure no personal information is included).
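The verified-answers flow above can be sketched in a few lines of Python. This is another hedged illustration rather than a recommended design. Question similarity is measured with Jaccard overlap of word sets, a simple swapped-in technique where a real platform would more likely compare embeddings. The 0.6 `threshold` is a hypothetical value that would need tuning on real questions. `generate` stands in for the RAG pipeline. The expert review itself (checking accuracy and removing personal information) happens outside the code; `approve` only records its outcome.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

STOPWORDS = {"the", "a", "an", "was", "who", "on", "in", "of", "to", "is", "what"}


def words(text: str) -> set[str]:
    """Lower-case the text and return its non-stopword words."""
    return set(re.findall(r"[a-z0-9']+", text.lower())) - STOPWORDS


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two questions have in common (0.0 = none, 1.0 = identical)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


@dataclass
class VerifiedAnswerStore:
    # question -> expert-approved answer
    verified: dict[str, str] = field(default_factory=dict)
    # (question, generated answer) pairs waiting for expert review
    review_queue: list[tuple[str, str]] = field(default_factory=list)
    threshold: float = 0.6  # hypothetical cut-off for a "good match"

    def ask(self, question: str, generate: Callable[[str], str]) -> tuple[str, bool]:
        """Return (answer, is_verified) for a user's question."""
        q = words(question)
        best_score, best_answer = 0.0, None
        for known_question, known_answer in self.verified.items():
            score = jaccard(q, words(known_question))
            if score > best_score:
                best_score, best_answer = score, known_answer
        # Step 2: a close enough match to a verified question, so no LLM is needed.
        if best_answer is not None and best_score >= self.threshold:
            return best_answer, True
        # Step 3: fall back to the generated answer and queue it for expert review.
        generated = generate(question)
        self.review_queue.append((question, generated))
        return generated, False

    def approve(self, question: str, answer: str) -> None:
        """Record an expert's approved (possibly edited) answer for a queued question."""
        self.review_queue = [(q, a) for q, a in self.review_queue if q != question]
        self.verified[question] = answer
```

In use, a rephrased question such as "Who was the first man to walk on the moon?" would match a verified "Who was the first man on the moon?" (three of four words shared, a score of 0.75) and get the expert answer without touching the LLM. An unseen question would get a generated answer, flagged as unverified, and join the review queue. Note that the model's job in step 2 is only judging similarity between questions, which is the easier task described below.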

It’s easy to see the advantage of this approach if we go back to thinking of the LLM as a person. Most of us have a reasonable level of comprehension and would be fine answering questions in an open-book test across a range of domains. But for very specific expert subjects, such as law or medicine, reference texts can be complex and take context-specific knowledge to comprehend. Given advanced subject documentation and a question, it’s quite possible that a human, or an LLM, won’t get it quite right 100% of the time.

However, if, as in this new process, the LLM’s task was not to answer the question but rather to judge how similar the question asked is to existing questions, it would be significantly more straightforward.

Conclusion

So that’s a quick run-through, at a very high, non-technical level, of the way some people are utilising LLMs in knowledge-based platforms, and how we can work to mitigate many of the hallucination risks of vanilla off-the-shelf LLMs observed in the original Stanford research.

The caveat, of course, is that to remove the dependency on the LLM’s knowledge, you need to supplement it with data/knowledge of your own.

As ever, data is king.