OpenAI vs the internet (and NYT)

Thoughts on the news that the New York Times is suing OpenAI and Microsoft

tldr;

🚨 Over Christmas, it was announced that the New York Times is suing OpenAI and Microsoft over IP and copyright claims relating to its content being used to train the LLMs behind ChatGPT.

🌍 GenAI (ChatGPT or others) offers an alternative UI and interaction model for the web, and has interesting implications for the next phase of the internet.

The news itself has already been well reported, but the discussion raises a few questions that are worth thinking through.

The NYT case is pretty straightforward: OpenAI is looking like a trillion-dollar company, and its product has been made possible by huge data sets of content. It seems reasonable that anyone who owns a sizeable chunk of that content would want to stake their claim to some of those $$$.

Furthermore, and more interestingly, the arrival of GenAI and ChatGPT has the potential to change the way we think about and interact with the web. That means GenAI platforms like ChatGPT are not only huge businesses in their own right; they are also direct competitors to businesses that have built their revenue around creating and publishing content, like the NYT.

OpenAI & other people’s data

The first interesting point to note in this discussion is that OpenAI doesn’t store, or directly use in real time, any NYT data.

The simplest way to reason about GenAI models is to think of them a bit like a person. ChatGPT was trained on a tonne of data, including the NYT’s, which is a bit like a person learning by reading the content. ChatGPT doesn’t store the NYT data in a database or hold it verbatim; rather, during training it read and learnt, much like a person might read a copy of the paper and learn things from it.

OpenAI, just like a regular reader, isn’t walking around with every copy of the NYT paper to hand, ready to open up and quote verbatim to answer questions.

Obviously, scale is a factor here: a trillion-dollar company is clearly different from an individual. However, even if you started a company that provided industry insights and info based on NYT articles and it became huge, it probably still wouldn’t be a threat to the NYT. After all, similar businesses already exist: strategy and consultancy firms offer Horizon Scanning, i.e. consuming news and industry updates to provide domain expertise and advice. The key distinction, and the bigger issue for the NYT, is that ChatGPT can now produce content very similar to NYT articles on demand, and in doing so has become a significant competitor with the power to make a huge dent in the NYT’s current revenue model.

OpenAI paid publisher deals

Possibly as a pre-emptive measure, OpenAI have recently announced an eight-figure deal to access Axel Springer’s data (Springer owns several publications, including Business Insider). The deal provides access to Springer’s archive (for further training of models) as well as live access to its latest content.

It’s the second half of this arrangement that is the most interesting to unpick.

Already, it’s fairly easy to see that ChatGPT could be something of a threat to traditional search engines (such as Google) and content platforms (such as the NYT). Previously, if you were looking for an answer on the internet, the process was something like this:

Enter your question (or a search-friendly version of it) into Google

Try to work out which (if any) of the returned search results looked like they might contain the answer you were after

Click through and read the article, hopefully working out the answer

Google then started simplifying that further with its “featured snippets”, displaying text from the results that it thought answered your question, so users could potentially get the answer from a website without even visiting it (reducing click-throughs and visitors to websites, and with them the monetisation opportunities for site owners).

With ChatGPT, the process is even simpler: you enter the question and get an immediate answer within the app. Whilst this is a far quicker and easier way to get to an answer, ChatGPT faces two large(ish) hurdles:

Credibility/source verification - ChatGPT provides a text-only answer, without references to where it learnt that information.

Real-time answers - ChatGPT only has knowledge based on its training data, which is currently a couple of years behind.

Now, for the majority of users, the reality is that the first issue isn’t really a deal-breaker. People might not like to acknowledge it, but that is broadly the way the internet already works. After all, how many of us verify the sources or credibility of websites we land on following a Google search? Any idiot can create a website and publish information without quality control or accuracy checks, so being able to click through from Google isn’t a great measure of credibility (sure, there is some element of social proof baked into the way the Google algorithm works, but lots of people can be wrong together).

The second point, regarding up-to-date data, is the more interesting part of the Springer deal. Real-time access means OpenAI will be able to answer questions using ChatGPT whilst also pulling in credible, up-to-date data as a live source. That feed lets them start to tackle both hurdles: ChatGPT can provide source references (as it will know where it got a specific answer from), and it can answer questions about current events. To jump back to the earlier analogy of ChatGPT not carrying around every copy of the NYT, it will effectively now be able to carry around every copy of the Springer publications, pulling info from them, quoting directly and linking to the source articles it references.

What does this mean for the web?

The idea of ChatGPT as an alternative to traditional search has some interesting implications for the web. If OpenAI strikes a few more publisher-data deals (and the current feeling is that the NYT won’t win this case, but will settle with a deal), then ChatGPT will increasingly look like a one-stop destination for people who would traditionally Google their questions. That makes ChatGPT the gatekeeper for content: you either get a generic answer from ChatGPT and trust the all-knowing AI, or you get an answer generated from verified, up-to-date sources from its chosen partners (currently Springer), along with links back to the original articles. This kind of gatekeeper, limited-partner access to the world’s data and the web would also mean organic traffic to hobbyist sites, start-ups, community forums and so on would drop off massively with this shift in platform.

As an example, I run a food website (see what I said earlier about how any idiot can build a website) that currently gets a fairly modest 4-5k clicks a month from organic Google search traffic. There haven’t been any marketing or social media campaigns; it’s just indexed on the web, and if people happen to search for specific topics and haven’t found what they want elsewhere, they might stumble upon my site, or anyone else’s for that matter. This kind of site, and these kinds of numbers, are obviously of no interest to OpenAI, but if users moved away from the traditional (vaguely) open and democratic web-crawled search to a ChatGPT-type solution, you’d assume my site traffic (along with that of millions of others) would start to move towards zero.

Meanwhile, a serious competitor to OpenAI is Google, and undoubtedly they will also be looking to work out how search and AI will work together in the future (and more importantly, how they can still get that ad revenue!). It’s pretty easy to imagine a couple of iterations of possible GenAI+Search solutions:

An enhanced version of the current “snippets” - Google shows its search results as it does now, but also includes AI-generated answer snippets for the searched question, each based only on the content of a single site

A more thorough overhaul to an “AI first” approach to search - Google could still fetch and display search results, but rather than making them the primary feature on the page, it lists them in a sidebar for reference, and the primary interaction plays out as a conversation between the user and Google. Maybe the chat shows an answer generated from the content of the first 10 Google search results, and users can then interrogate it further, or include/exclude specific result sites to get answers generated from them (and click through to the websites if they really wanted to).

The latter seems like a plausible outcome and is very close to what Google’s Bard currently does. Google could integrate the two platforms, making Bard essentially the default search platform, while showing the search results and content used to generate the answer more prominently.

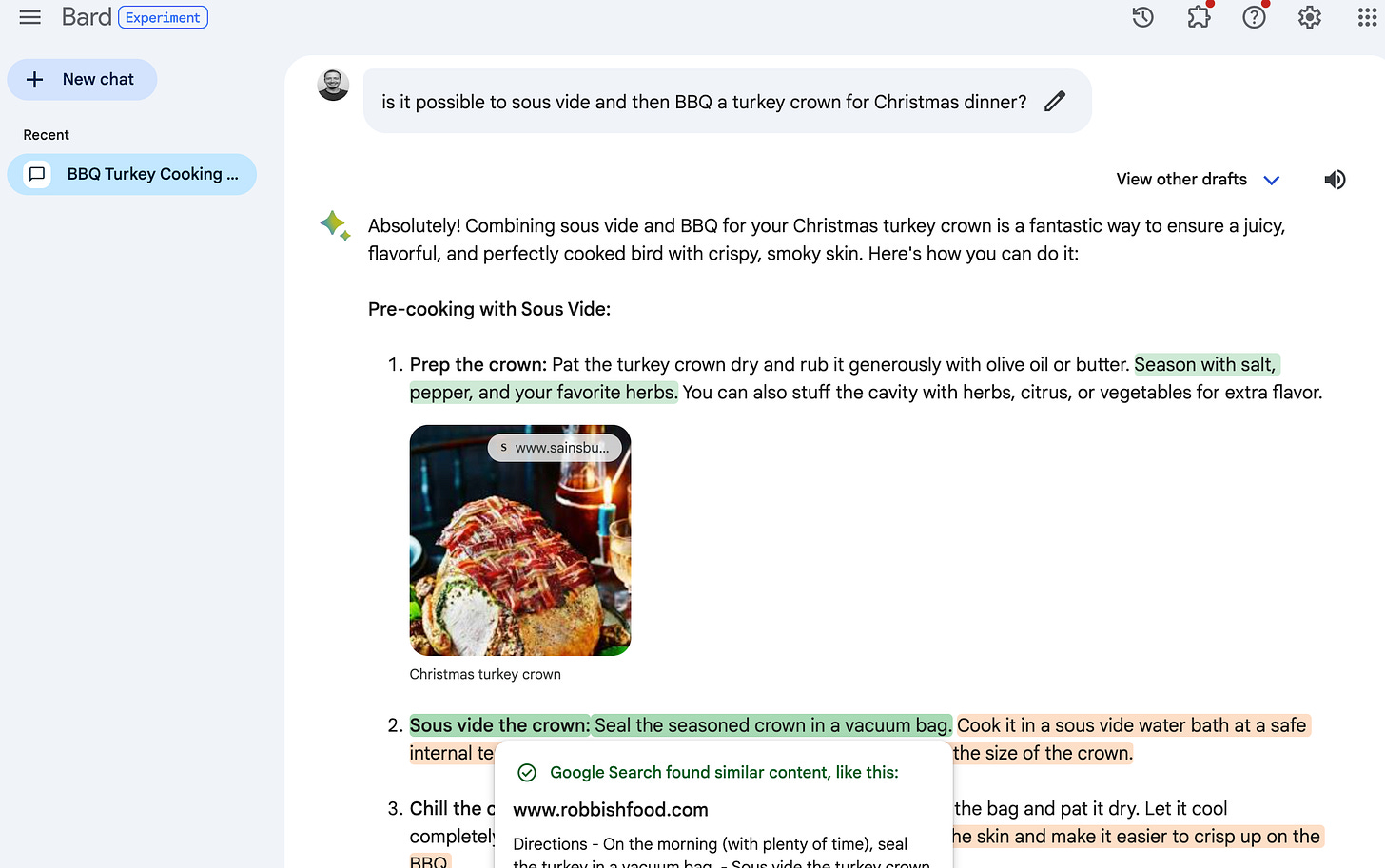

The image above shows the current Bard experience (Google’s ChatGPT equivalent). It works as follows:

User asks a question

Bard generates an answer using its GenAI model

Google can then perform a quality-control/cross-reference check on the generated answer: if Google finds similar matches on real websites, the relevant lines are highlighted with a link to the matching website

For the cross-reference check, Google isn’t currently using those sites to generate the answer; rather, it is retrospectively checking the generated answer against search results. (The cross-reference link in the Bard screenshot above is to my site, and Google didn’t generate this answer directly from my live site.)
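To make that distinction concrete, here’s a toy sketch in Python of a retrospective cross-reference check. It is not Google’s implementation, which isn’t public: the hypothetical `cross_reference` function, the crude word-overlap (Jaccard) score standing in for proper semantic matching, the 0.5 threshold and the example URL are all assumptions for illustration. What it shows is that the answer already exists before any search result is looked at; the results are only used afterwards to attach links.

```python
import re

# Hypothetical illustration only: not how Bard/Google actually does this.

def words(text: str) -> set[str]:
    # Lower-cased word set; a crude stand-in for real semantic matching
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def jaccard(a: set[str], b: set[str]) -> float:
    # Overlap between two word sets: 0.0 (nothing shared) to 1.0 (identical)
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)

def sentences(text: str) -> list[str]:
    # Naive sentence split on ., ! or ? followed by whitespace
    return re.split(r"(?<=[.!?])\s+", text.strip())

def cross_reference(answer: str, results: dict[str, str], threshold: float = 0.5):
    """Pair each sentence of an already-generated answer with the URL of the
    best-matching search result, or None if nothing matches closely enough.
    The answer is never generated from `results`; they are only checked afterwards."""
    checked = []
    for sentence in sentences(answer):
        best_url, best_score = None, 0.0
        for url, page_text in results.items():
            for page_sentence in sentences(page_text):
                score = jaccard(words(sentence), words(page_sentence))
                if score > best_score:
                    best_url, best_score = url, score
        checked.append((sentence, best_url if best_score >= threshold else None))
    return checked

if __name__ == "__main__":
    answer = "Rest the dough for an hour. Bake it at 220C for 20 minutes."
    results = {"https://example.com/bread": "Rest the dough for an hour before shaping."}
    for sentence, url in cross_reference(answer, results):
        print(url or "no match", "|", sentence)
```

Because links are only attached after the fact, a highlighted line shows that a site agrees with the answer, not that the answer came from that site, which is how a site can show up in the check without having been used to generate anything.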

It’s easy to see how an iteration of the above example could be the future of Google search. It even feels as though it could offer a different ad-monetisation model for Google: advertising links could be embedded in the generated content, making them much more specific and relevant to individual sentences or pieces of guidance. (Ad payments might then have to become more like royalties for the sites used to generate the answer, but that might be a more favourable publisher agreement for smaller publishers anyway.)

If Google wanted to start answering questions based on live websites in search results, it would need to make this an opt-in feature (i.e. sites consenting to Google using their data for real-time AI responses). The upside would be that all sites, of any size, could still be discoverable on the web as they are now, with the option to opt in to being included in generated AI responses. The downside, of course, is that click-through rates would still take a significant hit, with AI summarising answers directly in Google. And while Google would have to keep an opt-out option, over time it would no doubt start penalising sites that opt out, pushing them down the search rankings and making it not-really-optional anyway.

And this is what the NYT is staring down right now. As well as wanting a piece of an awfully large-looking pie, they want their content and IP referenced and, as much as possible, to keep those customers on their platform reading their content, where they can monetise and up-sell however they like.

So is it the end of the web as we know it?

I am actually optimistic about GenAI as the next evolution of the internet, and of platforms in general, and about how these discussions will conclude.

Whatever happens, it will be interesting to see how all these threads come together and where people land on what is acceptable fair use, as well as how the web and its many independent creators, thinkers and builders evolve.